Please take a moment as you read this toolsmith to honor those lost in the Oso, WA landslide disaster and those who have lost loved ones, friends, and homes. Pro Civitas et Patria.

Prerequisites/dependencies

Windows for Oryon C Portable

Mac OS X or Windows for WhiteHat Aviator

Introduction

Spring is upon us and with April comes a focus on Security and Cloud Computing in the ISSA Journal and as such a focus on security-centric Chromium-based web browsers in toolsmith. It also freaks me out just a bit to say this but with April also comes the 90th consecutive toolsmith. I sure hope you enjoy reading it as much as I do writing it; it’s been a fabulous seven year plus journey so far.

Those of you who enjoy the benefits of rich web content, fast load times, and flexible browser extensibility have likely tried or use the Chrome browser. What you may not be aware of is that there are other Chromium-based browsers that are built with a bit more attention to privacy than might be expected from Chrome proper.

Full disclosure right up front: as a reminder, I work for Microsoft, and the one thing this article won’tbe is any kind of a knock on Google Chrome privacy posture or a browser comparison beyond these two Chromium variants. There are plenty of other battles to fight than one in the Browser Wars. We will however have a usability and features-based discussion on Oryon C Portable, an OSINT-focused browser built on the SRWare Iron version 31.0.1700.0 of Chromium, and WhiteHat Aviator, also Chromium based. Note that Chromium, no matter the variant, includes sandboxing which has obvious security advantages.

Oryon C Portable is a web browser designed to assist researchers in conducting Open Source Intelligence (OSINT) investigations, with more than 70 pre-installed tools, while WhiteHat Aviator describes itself the “best and easiest way to bank, shop, browse, and use social networks while stopping viruses, advertisers, hackers, and cyber-crooks.”

According to Marcin Meller of OSINT Insight, the next version of Oryon C will be named Oryon C OSINT Framework and will be based on their own build of Chromium. They’ve made some changes to the tool sets and information sources. While there will be a few new interesting solutions, they also managed to reduce features that proved to be unnecessary. The browser will be lighter, clearer, and more effective, and the new version will offer a cross-platform support including Windows, Linux, and Mac OS X along with a special edition of Oryon F based on the Mozilla source code, specifically for Firefox enthusiasts. These new releases should appear online sometime this summer at the latest. Marcin says that thanks to great feedback from users, including some excellent OSINT specialists, they are highly motivated to make Oryon an even more solid and powerful tool. The active users are the strength of this project, thus, Marcin invites everyone to share their experiences and support Oryon.

When I pinged Jeremiah Grossman, now WhiteHat’s CEO, he reminded me that Robert ‘RSnake’ Hansen, VP of WhiteHat labs, leads the Aviatorproject. Ah, the fond memories of April Fools’ Day past (5 years ago now) and the birth of the Application Security Specialist (ASS) certification. Jeremiah is the master of April Fools’ mayhem. It’s not often that you get the opportunity for a photo opp with both Jeremiah and RSnake, but if you’re wearing your ASS shirt at the BlueHat conference, you just might.

|

| FIGURE 1: Robert, Russ, and Jeremiah: certified |

Robert filled me in in the Aviator project: “WhiteHat Aviator started off being a more private and secure browsing option for our own internal users. It has morphed into being a consumer product (Mac and Windows) that has additional and originally unforeseen merits. For instance, it is significantly faster due to having no ads, and by virtue of making Flash and Java a "click-to-play" option. Users on GoGo inflight wireless love it, because it makes the web usable over latent connections, not to mention it uses less power on your laptop. We are giving the browser away for free for now, and all users who download it will be grandfathered in, but in the future we will charge for the browser to ensure that our interests are aligned with the user and to help pay for development without requiring us to steal personal information from our users. ;-) We will quite possibly be the first browser with tech-support!”

Both of the browsers offer the added benefit of enhanced privacy but serve rather different purposes, so let’s explore each for their strengths.

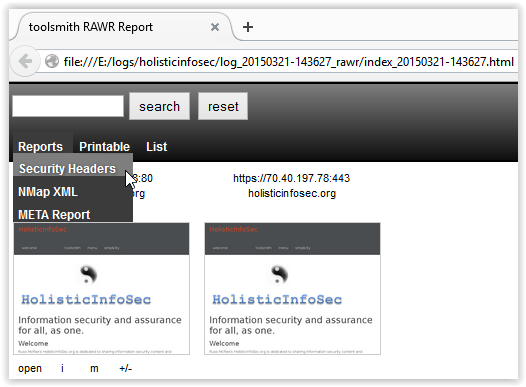

Oryon C Portable

OSINT fans rejoice, there’s a browser dedicated to your cause! Oryon includes more than 70 pre-installed tools, more than 600 links to specialized sources of information and online investigative tools, additional privacy protection features, and a ready-to-use OPML file containing a sorted collection of information sources specific to OSINT, Intelligence, InfoSec, defense, and more. Oryon C Portable is also quite literally…portable. You can run it from all sorts of USB and optical media. I’ll pause for a second so you can take in all the glorious OSINT power at your fingertips as seen in Figure 2.

|

| FIGURE 2: Revel in the OSINT majesty |

You can manage the Oryon C tools from the, yep, you guessed it, the Oryon C tool button. As you do so you’ll see the related button appear on the toolbar and a popup notice that the extension has been enabled. From the same tools button as seen in Figure 3 you can open the full tools menu to create extensions groups and search/sort your extensions.

|

| FIGURE 3: Enable Oryon tool families |

|

| FIGURE 4: All in one Web Searcher results |

WhiteHat Aviator

For Aviator I thought I’d conduct an interesting study, albeit not following optimal scientific standards.

On a Windows 7 virtual machine, I conducted default installations of Aviator and Chrome and made no setting changes. With no other applications running, and no processes generating any network traffic, I executed the following:

Step 1

1) Started Wireshark

2) Initiated a capture on the active interface

3) Started Aviator

4) Browsed to http://holisticinfosec.blogspot.com

5) Terminated Aviator

6) Stopped Wireshark with 5250 frames captured

Step 2

1) Started Wireshark

2) Initiated a capture on the active interface

3) Started Chrome

4) Browsed to http://holisticinfosec.blogspot.com

5) Terminated Chrome

6) Stopped Wireshark with 5250 frames captured

Step 3

1) Open aviator.pcap in NetworkMiner 1.5 and sorted by Hostname

2) Open chrome.pcap in NetworkMiner 1.5 and sorted by Hostname

3) Compared results

The results were revealing to be sure.

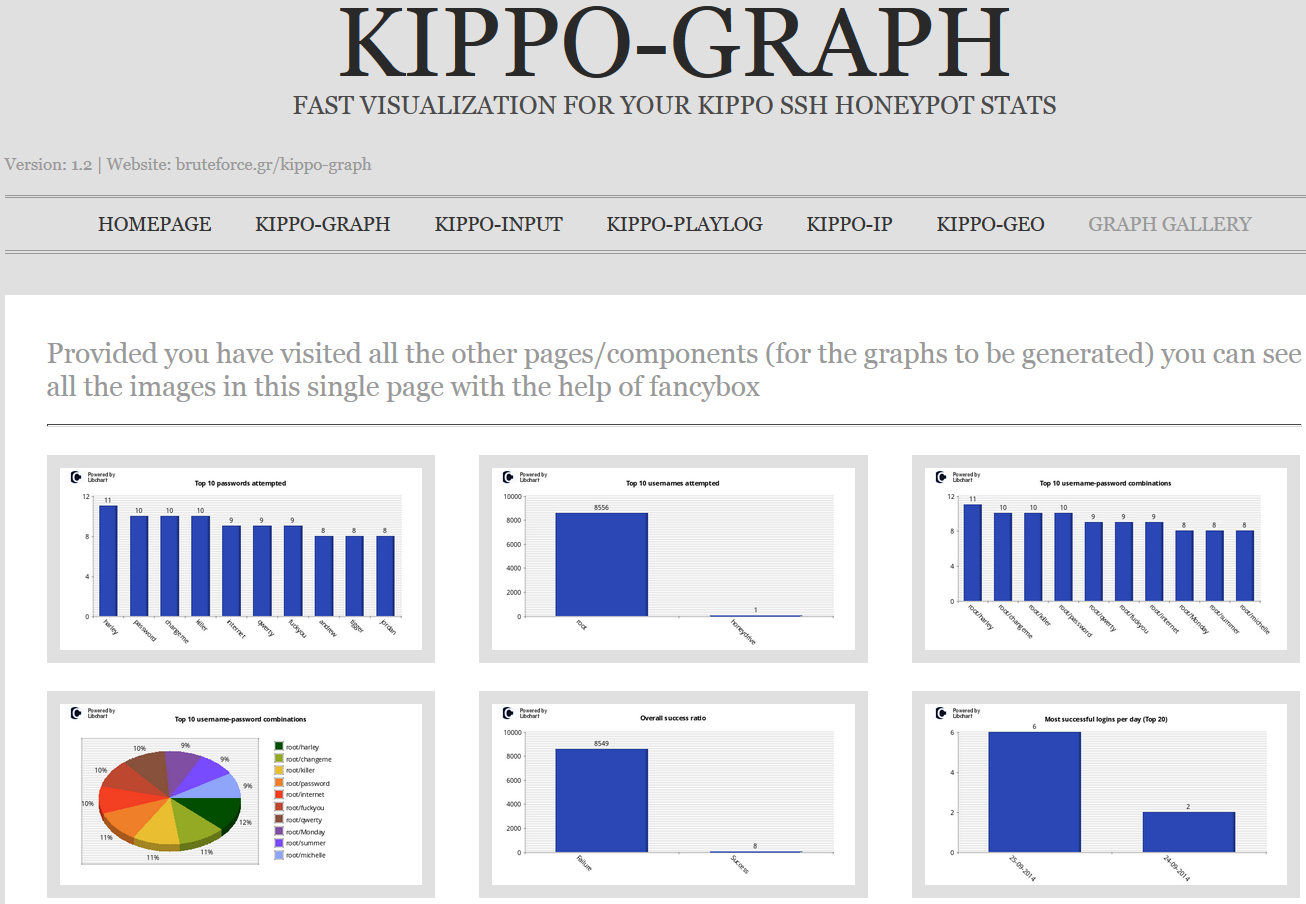

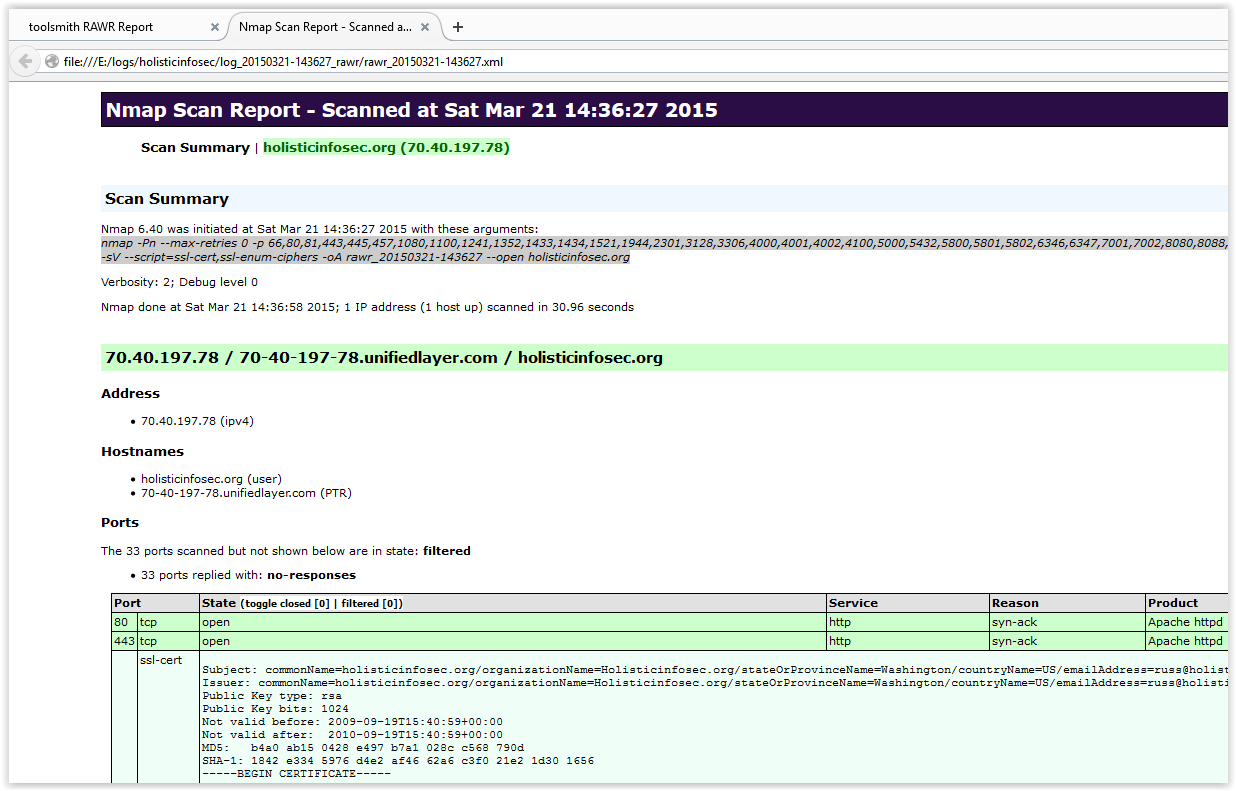

I’m glad to share the captures for your own comparisons; just ping me via email or Twitter if you’d like copies. Notice in Figure 5 the significant differences between counts specific to hosts, files, images, credentials, sessions, DNS, and Parameters.

I’m glad to share the captures for your own comparisons; just ping me via email or Twitter if you’d like copies. Notice in Figure 5 the significant differences between counts specific to hosts, files, images, credentials, sessions, DNS, and Parameters.

|

| FIGURE 5: Comparing the differences between Aviator and Chrome browser session network traffic |

Aviator is significantly less chatty than Chrome.

Supporting statistics as derived from results seen in Figure 5:

120% less host contact in Aviator capture vs. Chrome capture

69% less file interaction (download of certs, gifs, etc.) in Aviator capture vs. Chrome capture

86% fewer images presented (ads) in Aviator capture vs. Chrome capture

63% fewer total sessions in the Aviator capture vs. the Chrome capture

69% fewer DNS lookups in the Aviator capture vs. the Chrome capture

Hopefully you get the point. :-)

These differences between default configurations of Aviator and Chrome are achieved as follows:

Supporting statistics as derived from results seen in Figure 5:

120% less host contact in Aviator capture vs. Chrome capture

69% less file interaction (download of certs, gifs, etc.) in Aviator capture vs. Chrome capture

86% fewer images presented (ads) in Aviator capture vs. Chrome capture

63% fewer total sessions in the Aviator capture vs. the Chrome capture

69% fewer DNS lookups in the Aviator capture vs. the Chrome capture

Hopefully you get the point. :-)

These differences between default configurations of Aviator and Chrome are achieved as follows:

- Aviator's privacy and security safeguards are preconfigured, active and enabled by default

- Aviator eliminates hidden tracking and uses the Disconnect extension to block privacy-destroying tracking from advertisers and social media companies

- WhiteHat is not partnering with advertisers or selling click data

- Unwanted access is prevented as Aviator blocks internal address space to prevent malicious Web pages from hitting your websites, routers, and firewalls

It’s reasonable to ascertain that those with an affinity for strong default privacy settings will favor WhiteHat Aviator given the data noted in Figure 5 and settings provided out of the gate.

In Conclusion

These are a couple of fabulous browsers for your OSINT and privacy/security pleasure. They’re so easy to install and use (I didn’t even include an installation section, no need) that I strongly recommend that you do so immediately.

Take note, readers! July’s ISSA Journal will be entirely focused on the Practical Use of InfoSec Tools. Rather than put up what is usually just me going on about infosec tools, you should too! Send articles or abstracts to editor at issa dot org.

Ping me via email if you have questions or suggestions for topic via russ at holisticinfosec dot org or hit me on Twitter @holisticinfosec.

Cheers…until next month.

Acknowledgements

Marcin Meller, OSINT Insight